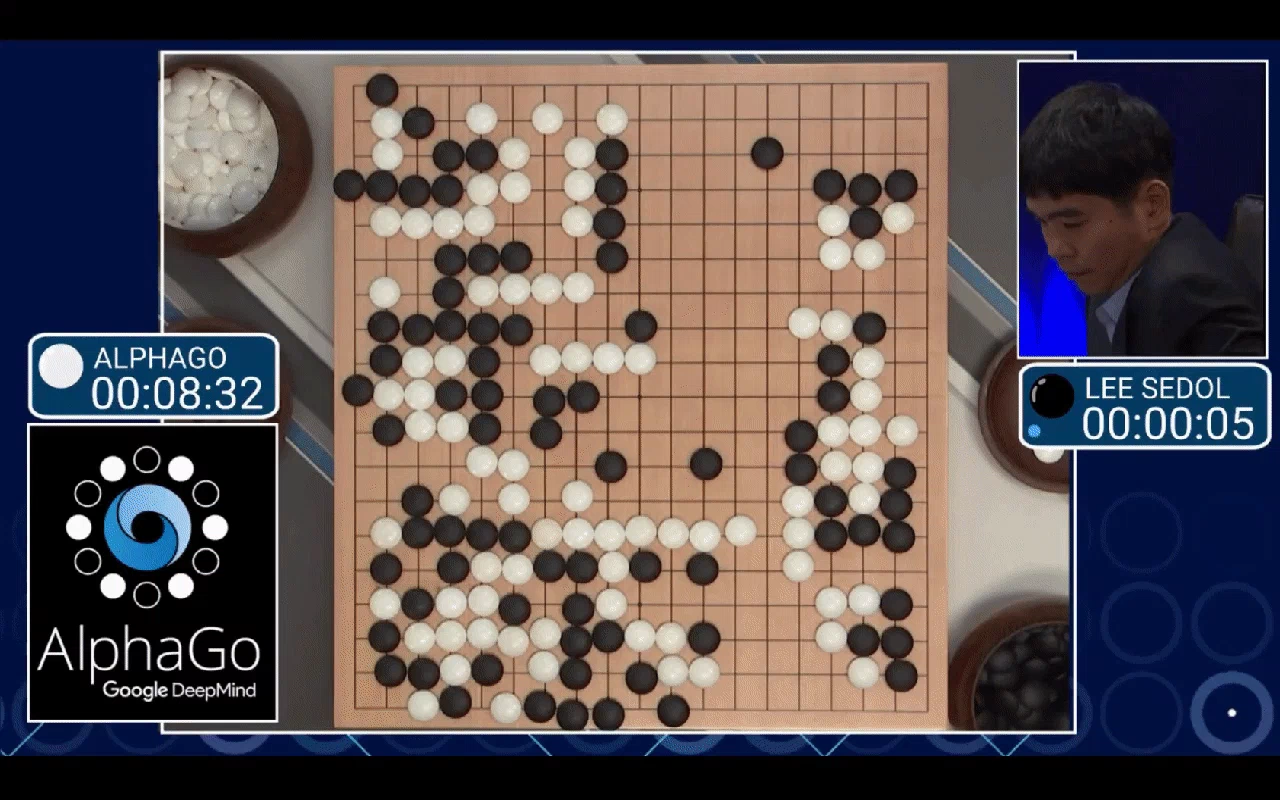

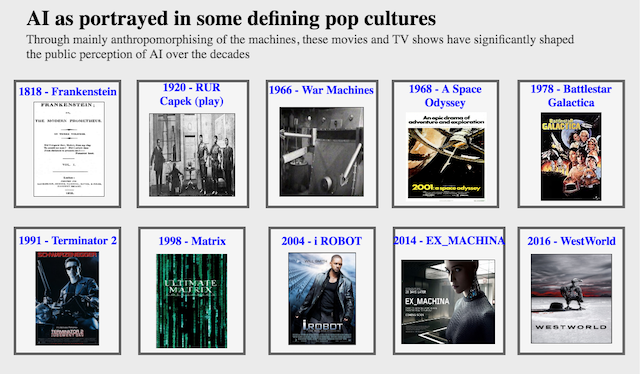

Science fiction and pop culture have presented AI to audiences in myriad ways over the decades. While these depictions have undeniably inspired many an invention, their more important effect has been to shape the public perception of AI. This has led to a far greater

The common theme in all these depictions has remained the same: machines acquire self-awareness and human-like consciousness, and through these traits they rise up, fight the human race, and eventually lord over it. While this makes for entertaining viewing, it also leaves a lasting and misleading impression on the audience. And because these themes have recurred for so many years, there is a widely shared belief that, as AI's capabilities grow, a doomsday scenario is inevitable.

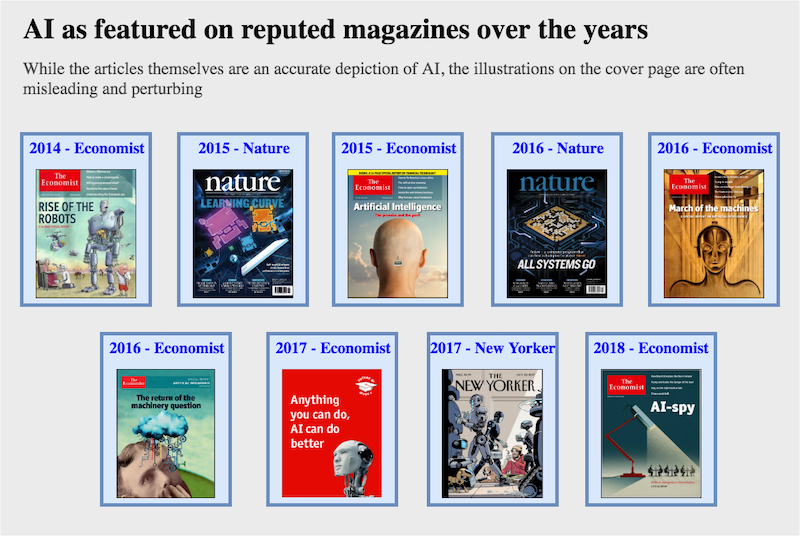

The ubiquity of AI applications over the last few years has naturally led to ever-increasing coverage in the media. In the era of clickbait, it seems even reputable magazines have followed suit by running dubious illustrations on their covers. While the articles themselves paint an accurate picture of the current state of AI, as you would expect from such publications, the cover illustrations are often eyebrow-raising, designed to catch people's attention.

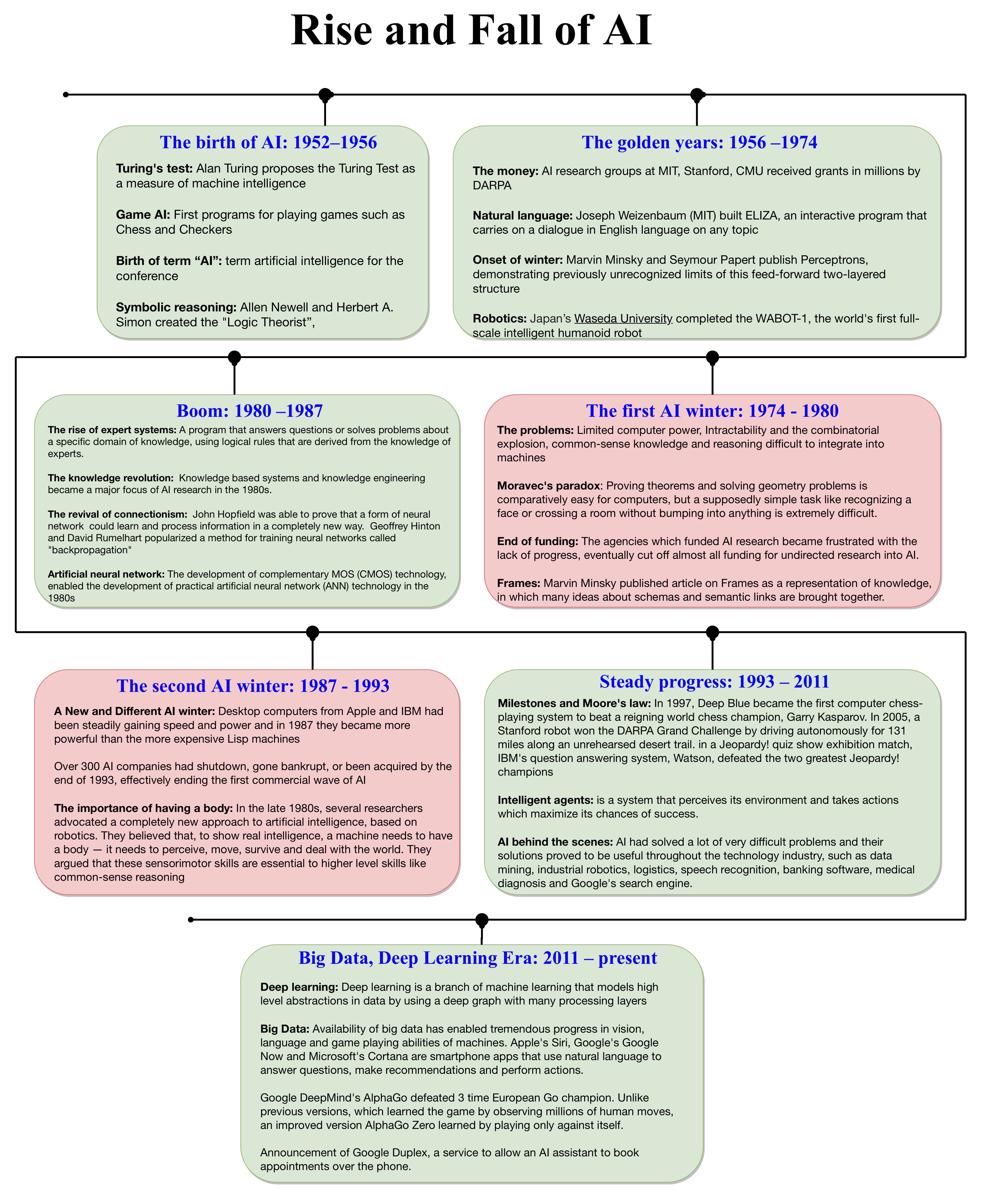

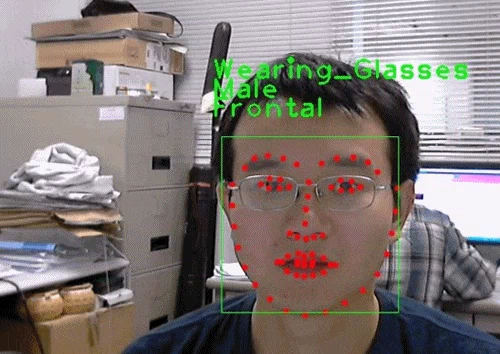

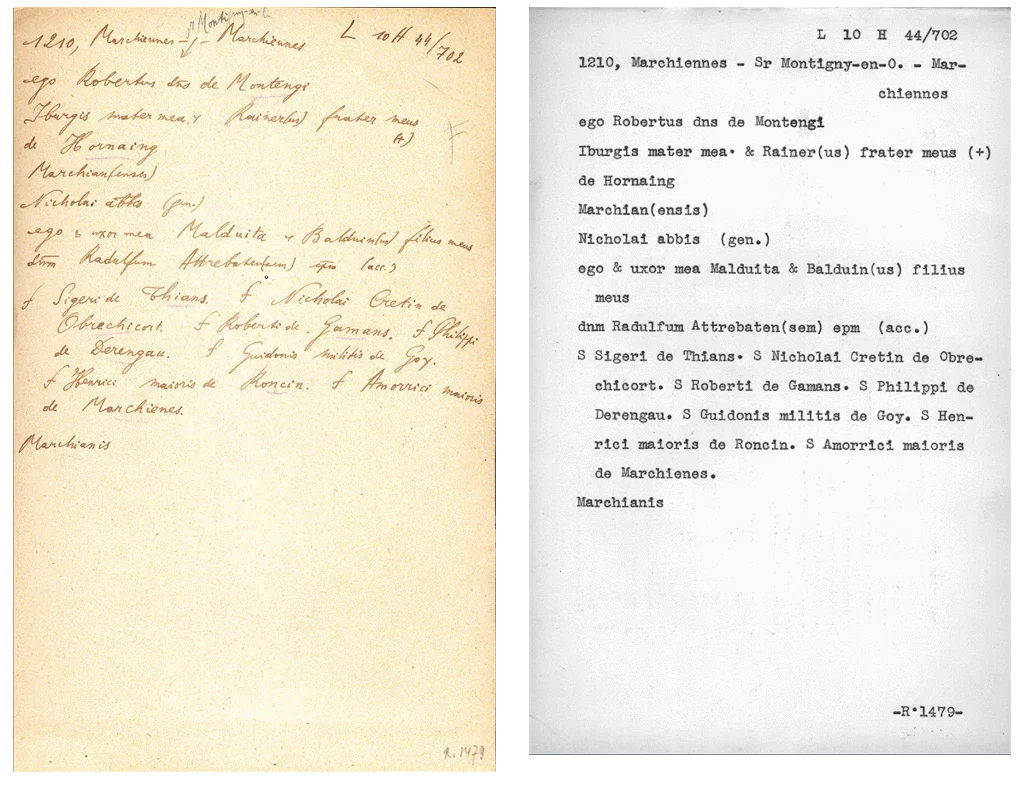

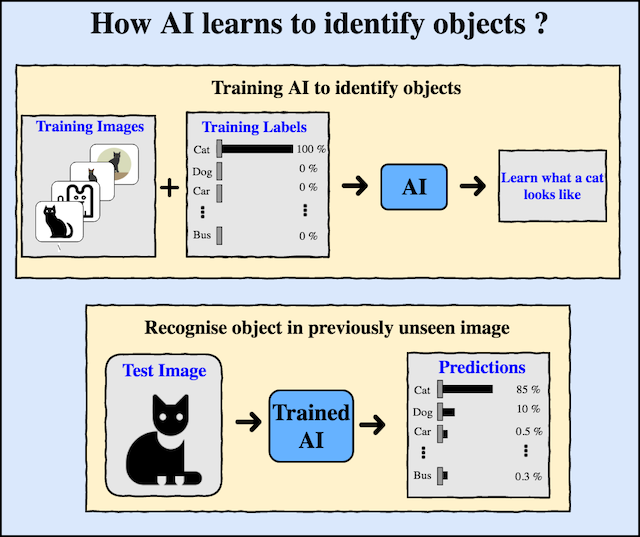

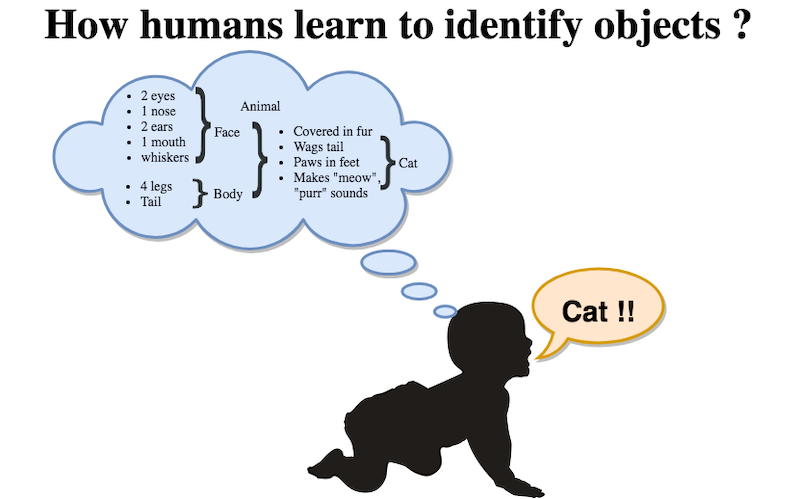

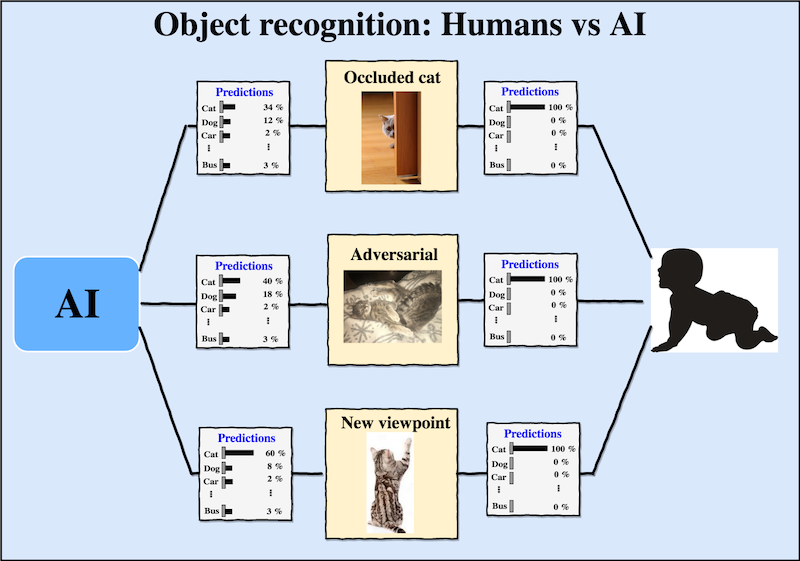

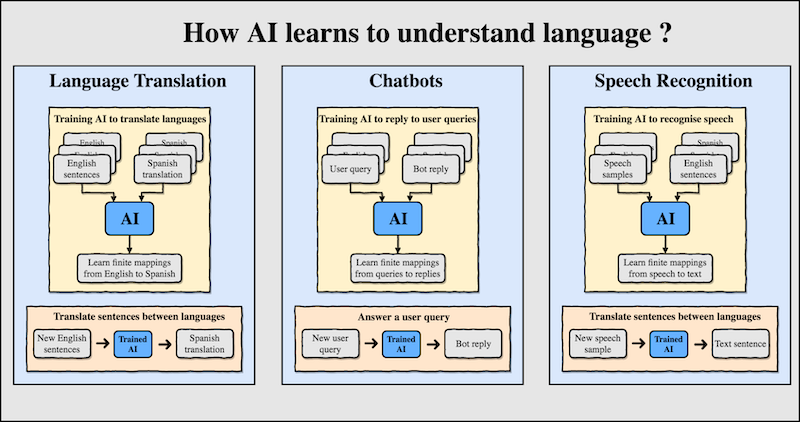

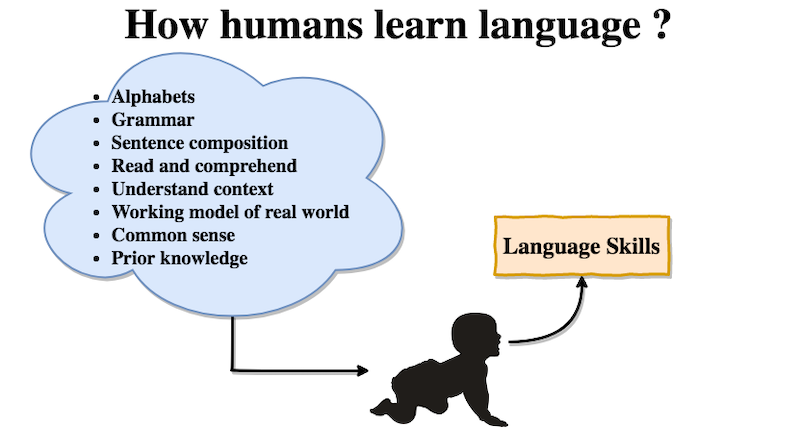

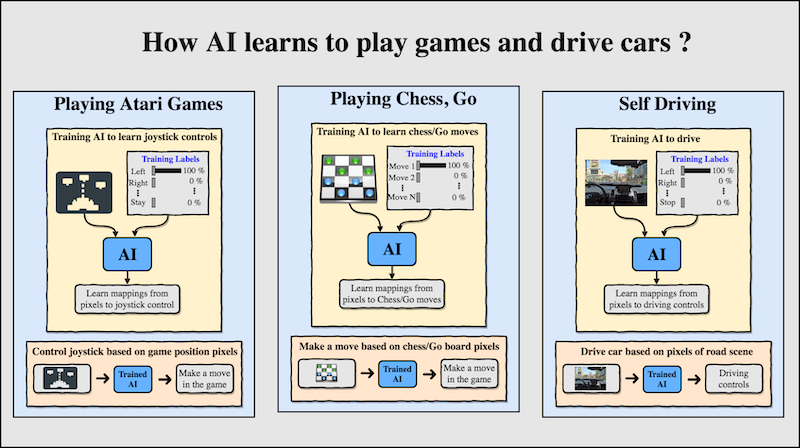

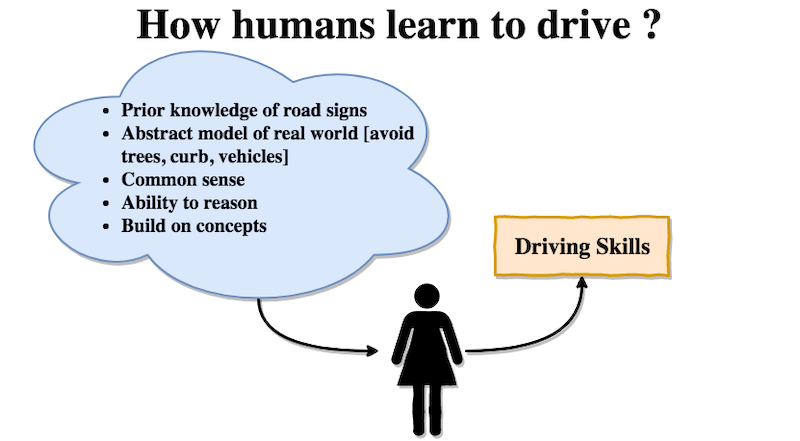

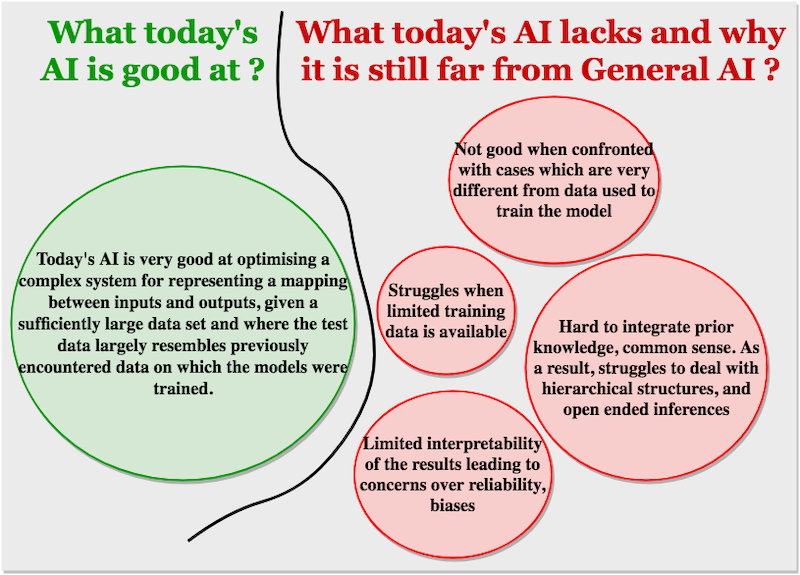

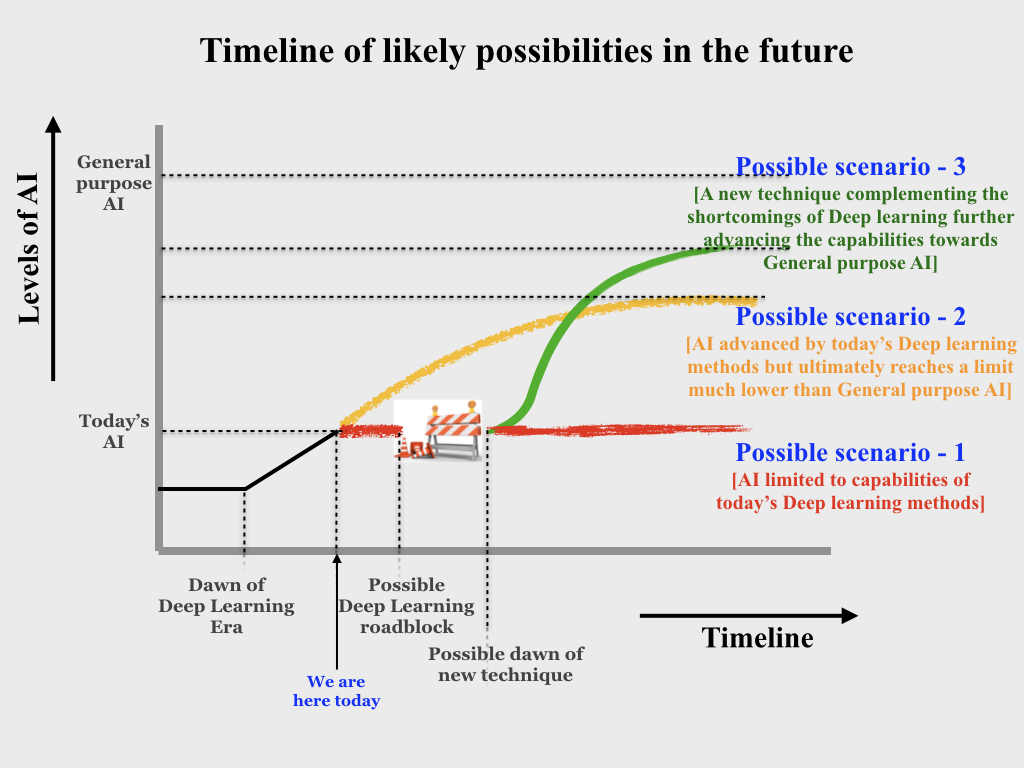

As a result of all this, the general public's opinion of AI leans more toward skepticism than toward belief in the potential of its numerous applications. Meanwhile, domain experts grow increasingly frustrated with the widespread misrepresentation of AI to the public. Explaining the underlying models and algorithms using math is certainly not an option for a general audience. In this regard, this article is an attempt to explain intuitively the underlying workings of today's AI systems, present the current state of AI, and in the process hopefully alleviate the growing fear of the rise of AI.