Explanation of Cooperation

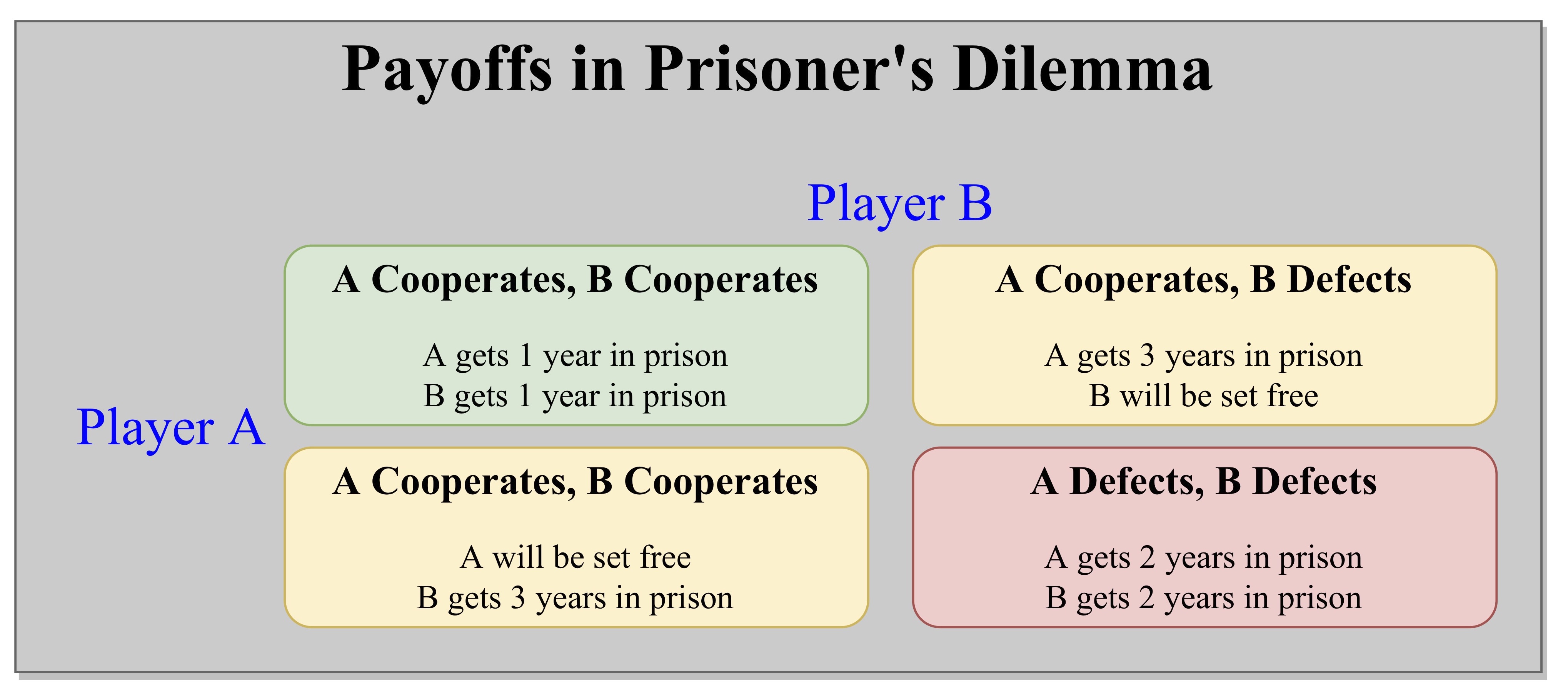

The prisoner's dilemma game has long illustrated that even if all members of a group would benefit from mutual cooperation, individual self-interest may not favor cooperation. The game codifies this problem and has been the subject of much research, both theoretical and experimental. Results from experimental economics show that humans often act more cooperatively than strict self-interest would seem to dictate. One reason may be that when the situation is repeated (the iterated prisoner's dilemma), non-cooperation can be punished more, and cooperation rewarded more, than the single-shot version of the problem would suggest. It has been suggested that this is one reason for the evolution of complex emotions in higher life forms.
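The tension described above can be made concrete with a small payoff table. The following is a minimal Python sketch, assuming the conventional illustrative payoffs of 5, 3, 1 and 0; the text gives no numbers, and the names `PAYOFF` and `best_reply` are hypothetical.

```python
# Assumed payoffs (illustrative only; the text gives no numbers).
# Key: (my_move, other_move) -> (my_payoff, other_payoff).
# "C" = cooperate, "D" = defect.
PAYOFF = {
    ("C", "C"): (3, 3),  # mutual cooperation
    ("C", "D"): (0, 5),  # I cooperate and am exploited
    ("D", "C"): (5, 0),  # I defect against a cooperator
    ("D", "D"): (1, 1),  # mutual defection
}


def best_reply(other_move):
    """Return the move that maximizes my single-shot payoff against a fixed move."""
    return max("CD", key=lambda my_move: PAYOFF[(my_move, other_move)][0])


# Defection is the best single-shot reply whatever the other player does ...
print(best_reply("C"), best_reply("D"))  # D D
# ... yet each player earns more from mutual cooperation than from mutual defection.
print(PAYOFF[("C", "C")][0] > PAYOFF[("D", "D")][0])  # True
```

With these assumed numbers, self-interest recommends defection in a single round even though both players would be better off cooperating, which is the dilemma in its single-shot form.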

Game theory

Game theory long lacked a satisfactory strategy for the Prisoner's Dilemma. Most of the games that game theory had previously investigated are zero-sum – that is, the total rewards are fixed, and a player does well only at the expense of other players. But real life is not zero-sum. Our best prospects are usually in cooperative efforts. In fact, the strategy of Tit-For-Tat (cooperate on the first move, then copy the partner's previous move) cannot score higher than its partner; at best it can only do as well. Yet it won Robert Axelrod's tournaments by consistently placing a strong second with a variety of partners.
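The claim that Tit-For-Tat never outscores its partner can be checked with a short simulation. This is a sketch under stated assumptions, not a reconstruction of any published tournament code: the payoffs (5, 3, 1, 0), the 200-round match length, and the partner strategies `always_defect` and `always_cooperate` are all chosen for illustration.

```python
# Assumed payoffs: (move_a, move_b) -> (payoff_a, payoff_b); "C" cooperate, "D" defect.
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def tit_for_tat(my_history, their_history):
    """Cooperate first, then copy the partner's previous move."""
    return their_history[-1] if their_history else "C"


def always_defect(my_history, their_history):
    return "D"


def always_cooperate(my_history, their_history):
    return "C"


def play(strategy_a, strategy_b, rounds=200):
    """Play an iterated match and return the two total scores."""
    history_a, history_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        # Both players choose simultaneously, seeing only past moves.
        move_a = strategy_a(history_a, history_b)
        move_b = strategy_b(history_b, history_a)
        payoff_a, payoff_b = PAYOFF[(move_a, move_b)]
        score_a += payoff_a
        score_b += payoff_b
        history_a.append(move_a)
        history_b.append(move_b)
    return score_a, score_b


for partner in (always_defect, always_cooperate, tit_for_tat):
    own, other = play(tit_for_tat, partner)
    print(partner.__name__, own, other, own <= other)
# always_defect 199 204 True
# always_cooperate 600 600 True
# tit_for_tat 600 600 True
```

Tit-For-Tat loses only the first round against a constant defector and then matches it move for move, so it trails by a single exploitation; against cooperative partners it ties. In none of these matches does it come out ahead.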

Darwinian context

Darwin's theory of evolution is explained on the basis of survival of the fittest. Species are pitted against species for shared resources, similar species with similar needs and niches even more so, and individuals within species most of all. All this comes down to one factor: out-competing all rivals and predators in producing progeny. Darwin's explanation of how preferential survival of the slightest benefits can lead to advanced forms is the most important explanatory principle in biology, and extremely powerful in many other fields. Such success has reinforced notions that life is in all respects a war of each against all, where every individual has to look out for himself, and where your gain is my loss. Explaining how cooperation can exist under such a view then becomes a challenge.

Darwin's explanation of how evolution works is quite simple, but the implications of how it might explain complex phenomena are not at all obvious; they have taken over a century to elaborate. Explaining how altruism – which by definition reduces personal fitness – can arise by natural selection is a particular problem, and the central theoretical problem of sociobiology. A possible explanation of altruism is provided by the theory of group selection, which argues that natural selection can act on groups: groups that are more successful – for any reason, including learned behaviors – will benefit the individuals of the group, even if they are not related. Group selection has had a powerful appeal, but has not been fully persuasive, in part because of difficulties regarding cheaters that participate in the group without contributing.

In a 1971 paper, Robert Trivers demonstrated how reciprocal altruism can evolve between unrelated individuals, even between individuals of entirely different species. The relationship of the individuals involved is exactly analogous to the situation in a certain form of the Prisoner's Dilemma. The key is that in the iterated Prisoner's Dilemma, both parties can benefit from the exchange of many seemingly altruistic acts. As Trivers says, it "takes the altruism out of altruism." The premise that self-interest is paramount is largely unchallenged, but it is turned on its head by recognition of a broader, more profound view of what constitutes self-interest.

In Conclusion

It does not matter why the individuals cooperate. The individuals may be prompted to the exchange of "altruistic" acts by entirely different genes, or no genes in particular, but both individuals can benefit simply on the basis of a shared exchange. In particular, "the benefits of human altruism are to be seen as coming directly from reciprocity – not indirectly through non-altruistic group benefits". Trivers' theory is very powerful. Not only can it replace group selection, it also predicts various observed behaviors, including moralistic aggression, gratitude and sympathy, guilt and reparative altruism, and the development of abilities to detect and discriminate against subtle cheaters. The benefits of such reciprocal altruism were dramatically demonstrated by a pair of tournaments held by Robert Axelrod around 1980.
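A toy round-robin tournament shows how a reciprocating strategy can finish at the top without ever beating an individual partner. The sketch below is not a reconstruction of Axelrod's tournaments: the four-strategy field, the payoffs (5, 3, 1, 0), the 200-round matches, and the choice to let each strategy also play a copy of itself are all assumptions made for illustration, and the ranking depends on them.

```python
from itertools import combinations_with_replacement

# Assumed payoffs: (move_a, move_b) -> (payoff_a, payoff_b); "C" cooperate, "D" defect.
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def tit_for_tat(mine, theirs):
    """Cooperate first, then copy the partner's previous move."""
    return theirs[-1] if theirs else "C"


def always_defect(mine, theirs):
    return "D"


def always_cooperate(mine, theirs):
    return "C"


def grudger(mine, theirs):
    """Hypothetical extra entrant: cooperate until the partner defects once."""
    return "D" if "D" in theirs else "C"


def play(strategy_a, strategy_b, rounds):
    """Play an iterated match and return the two total scores."""
    history_a, history_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(history_a, history_b)
        move_b = strategy_b(history_b, history_a)
        payoff_a, payoff_b = PAYOFF[(move_a, move_b)]
        score_a += payoff_a
        score_b += payoff_b
        history_a.append(move_a)
        history_b.append(move_b)
    return score_a, score_b


def round_robin(strategies, rounds=200):
    """Every strategy meets every other strategy and a copy of itself."""
    totals = {strategy.__name__: 0 for strategy in strategies}
    for a, b in combinations_with_replacement(strategies, 2):
        score_a, score_b = play(a, b, rounds)
        totals[a.__name__] += score_a
        if a is not b:  # count a self-play match only once
            totals[b.__name__] += score_b
    return totals


field = [tit_for_tat, always_defect, always_cooperate, grudger]
for name, total in sorted(round_robin(field).items(), key=lambda item: -item[1]):
    print(name, total)
```

In this assumed field, `always_defect` never loses a single match yet finishes last with 1608 points, while `tit_for_tat` and `grudger` never win a match yet tie for first with 1999. Dropping the self-play matches, for example, changes the ranking, so the output illustrates the mechanism rather than a general result.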

The tournaments' dramatic results showed that in a very simple game the conditions for survival (be "nice", be provocable, promote the mutual interest) seem to be the essence of morality. While this does not yet amount to a science of morality, the game-theoretic approach has clarified the conditions required for the evolution and persistence of cooperation, and shown how Darwinian natural selection can lead to complex behavior, including notions of morality, fairness, and justice. It shows that the nature of self-interest is more profound than previously considered, and that behavior that seems altruistic may, in a broader view, be individually beneficial.